ICLR 2025

*Equal contribution

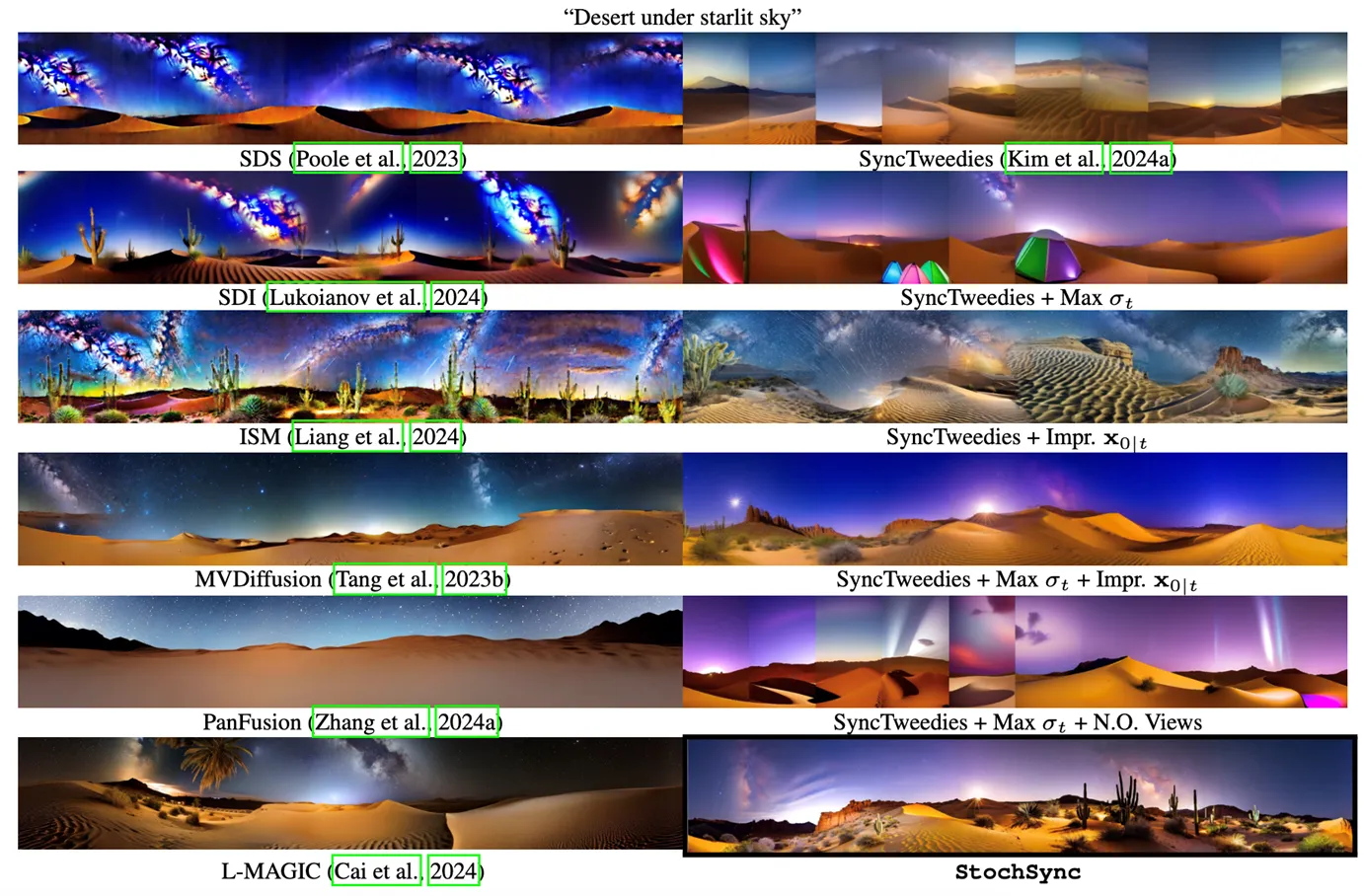

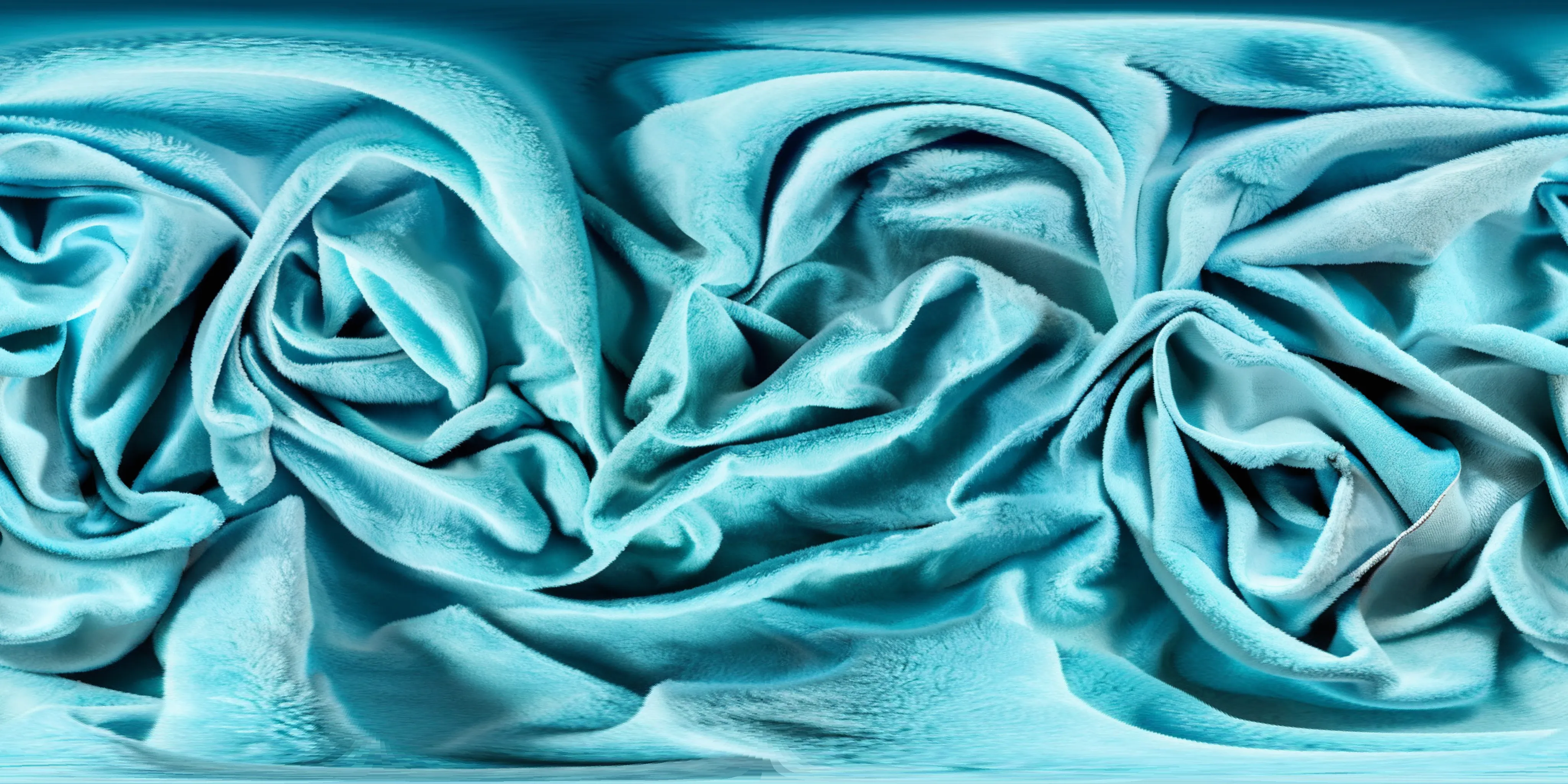

We propose a method for generating images in arbitrary spaces, such as 360° panoramas or textures on 3D surfaces, using a pretrained image diffusion model. The main challenge is bridging the gap between the 2D images that the diffusion model understands (the instance space) and the target space for image generation (the canonical space). Unlike previous methods, which either struggle without strong conditioning or lack fine details, our method combines the strengths of Diffusion Synchronization and Score Distillation Sampling to perform effectively even with weak conditioning. Our experiments show that it outperforms prior finetuning-based methods, especially in 360° panorama generation.

Our approach combines the strengths of two existing methods:

Diffusion Synchronization (DS): Excels in producing detailed images but struggles with coherence across views when instance and canonical spaces are not pixel-aligned, often requiring strong guidance like depth maps.

Score Distillation Sampling (SDS): Achieves coherent results, but lacks fine details and high-quality textures.

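We observed that the coherence in SDS comes from using maximum stochasticity in the denoising process. Specifically, the variance of the noise injected at each DDIM reverse diffusion step is set to its highest value, so every step re-noises the current clean-image prediction with fresh noise instead of reusing the predicted noise.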
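To make the role of the noise level concrete, the following is a minimal sketch of a single DDIM reverse step in NumPy. It uses the standard DDIM update, in which `sigma_t` controls how much fresh noise is injected. The function names, argument names, and the example schedule values are illustrative assumptions, not taken from the authors' code.

```python
import numpy as np

def ddim_step(x_t, eps_pred, abar_t, abar_prev, sigma_t, noise):
    """One standard DDIM reverse step from noise level abar_t to abar_prev.

    abar_* are cumulative alpha products; sigma_t sets the stochasticity.
    """
    # Clean-image estimate implied by the predicted noise.
    x0_pred = (x_t - np.sqrt(1.0 - abar_t) * eps_pred) / np.sqrt(abar_t)
    # Weight of the deterministic "direction" term; it shrinks as sigma_t grows.
    dir_coeff = np.sqrt(np.maximum(1.0 - abar_prev - sigma_t ** 2, 0.0))
    return np.sqrt(abar_prev) * x0_pred + dir_coeff * eps_pred + sigma_t * noise

def max_sigma(abar_prev):
    """Largest admissible sigma_t: the direction term vanishes entirely."""
    return np.sqrt(1.0 - abar_prev)

# Illustrative values only (assumed, not from the paper).
rng = np.random.default_rng(0)
x_t = rng.standard_normal(4)
eps_pred = rng.standard_normal(4)   # stand-in for a model's noise prediction
noise = rng.standard_normal(4)      # fresh Gaussian noise
abar_t, abar_prev = 0.5, 0.75

x_det = ddim_step(x_t, eps_pred, abar_t, abar_prev, 0.0, noise)                   # deterministic DDIM
x_max = ddim_step(x_t, eps_pred, abar_t, abar_prev, max_sigma(abar_prev), noise)  # maximum stochasticity
```

With `sigma_t = max_sigma(abar_prev)`, the step reduces to `sqrt(abar_prev) * x0_pred + sqrt(1 - abar_prev) * noise`: the clean prediction is re-noised with entirely fresh noise, which is the same forward-noising form that SDS applies to its current estimate at every iteration.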

By incorporating maximum stochasticity into the reverse diffusion process of DS, along with other methods to further enhance quality, we achieve the coherence benefits of SDS while retaining the detailed quality of DS.
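The combination described above can be illustrated with a toy loop. This is a sketch under strong assumptions, not the authors' implementation: the canonical space is a 1D array, the instance views are overlapping windows, the denoiser is a hypothetical stand-in (`toy_eps_model`), the schedule is made up, and the additional quality-enhancing components mentioned in the text are omitted. It shows only the structure: project the canonical sample into views, re-noise each view with fresh noise (maximum stochasticity), predict clean views, and aggregate them back into the canonical space.

```python
import numpy as np

def project(z, starts, width):
    """Canonical -> instance: cut overlapping windows out of the canonical array."""
    return np.stack([z[s:s + width] for s in starts])

def unproject(views, starts, width, size):
    """Instance -> canonical: average per-view predictions where windows overlap."""
    total = np.zeros(size)
    count = np.zeros(size)
    for v, s in zip(views, starts):
        total[s:s + width] += v
        count[s:s + width] += 1
    return total / np.maximum(count, 1)

def toy_eps_model(x_t, abar_t, target=0.5):
    """Hypothetical stand-in for a pretrained denoiser.

    It behaves as if every clean view equals the constant `target`.
    """
    return (x_t - np.sqrt(abar_t) * target) / np.sqrt(1.0 - abar_t)

def sync_with_max_stochasticity(size=16, width=8, starts=(0, 4, 8), steps=10, seed=0):
    rng = np.random.default_rng(seed)
    abars = np.linspace(0.01, 0.99, steps)   # assumed schedule, noisy -> clean
    z = rng.standard_normal(size)            # canonical sample
    for abar_t in abars:
        views = project(z, starts, width)
        # Maximum stochasticity: re-noise the projected views with fresh noise.
        noise = rng.standard_normal(views.shape)
        x_t = np.sqrt(abar_t) * views + np.sqrt(1.0 - abar_t) * noise
        # Predict clean views in instance space.
        eps = toy_eps_model(x_t, abar_t)
        x0 = (x_t - np.sqrt(1.0 - abar_t) * eps) / np.sqrt(abar_t)
        # Synchronize: aggregate all views back into the canonical space.
        z = unproject(x0, starts, width, size)
    return z

z_final = sync_with_max_stochasticity()
```

Because every view is regenerated from the shared canonical array at each step, overlapping regions are forced to agree. In a real setting `project` and `unproject` would be the rendering and aggregation maps between the canonical space and the instance space, and `toy_eps_model` would be replaced by the pretrained diffusion model.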